Neural Network Lab Test Instructions

You may use this example to debug your implementation if you wish. Note that the toolkit is not designed to predict multiple outputs, so you cannot pass this dataset to the toolkit on the command line as you normally would. It is intended only as a debugging example, and using it requires hardcoding the network topology and weights to match it.

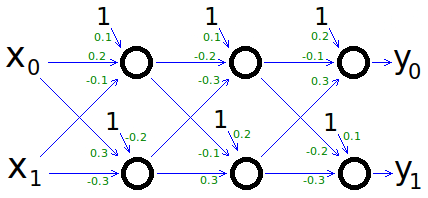

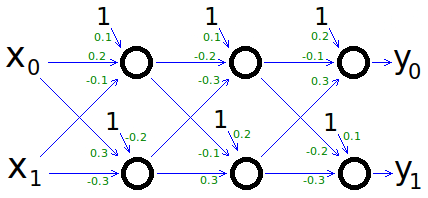

1- Construct a neural network with two hidden layers (two inputs, two units in each hidden layer, and two output units).
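One possible representation is sketched below. It assumes the 2-2-2-2 topology implied by the expected output, which lists six rows of three weights (one row per non-input unit). `Layer`, `Network`, and `makeNetwork` are hypothetical names, not part of the toolkit.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical layout: each non-input layer is a matrix with one row per unit.
// Row j holds {bias weight, weight from previous unit 0, weight from previous unit 1, ...}.
using Layer = std::vector<std::vector<double>>;
using Network = std::vector<Layer>;

// Builds a fully connected network from layer sizes, e.g. {2, 2, 2, 2} means
// 2 inputs, two hidden layers of 2 units each, and 2 outputs.
// All weights start at zero; the lab's fixed initial values are set separately.
Network makeNetwork(const std::vector<std::size_t>& sizes) {
    Network net;
    for (std::size_t l = 1; l < sizes.size(); ++l) {
        // sizes[l] units, each with one bias weight plus sizes[l - 1] input weights.
        net.emplace_back(sizes[l], std::vector<double>(sizes[l - 1] + 1, 0.0));
    }
    return net;
}
```

For this lab, `makeNetwork({2, 2, 2, 2})` yields three layers of two units, each unit holding three weights.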

2- Write a special method that initializes the weights to the values shown in the diagram above, instead of small random values.
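The diagrams are images, but the first "Weights:" listing in the expected output below holds the same starting values, since it is printed before the first pattern presentation. Working back from the first update (for example, the first row changes by 0.1 × 0.0006498826879 multiplied by 1, 0.3, and 0.7), each row appears to be one unit: its bias weight, then its weights from the previous layer's two units. The rows run from the first hidden layer to the output layer. The sketch below hardcodes that inferred layout; `testInitialWeights` is a hypothetical name.

```cpp
#include <vector>

// Hypothetical layout: one matrix per non-input layer, one row per unit,
// each row = {bias, weight from previous unit 1, weight from previous unit 2}.
using Layer = std::vector<std::vector<double>>;
using Network = std::vector<Layer>;

// Returns the lab's fixed starting weights instead of small random values.
// The row order is inferred from the expected output, not from the diagrams.
Network testInitialWeights() {
    return {
        {{0.1, 0.2, -0.1},     // first hidden layer, unit 1
         {-0.2, 0.3, -0.3}},   // first hidden layer, unit 2
        {{0.1, -0.2, -0.3},    // second hidden layer, unit 1
         {0.2, -0.1, 0.3}},    // second hidden layer, unit 2
        {{0.2, -0.1, 0.3},     // output unit 1 (y1)
         {0.1, -0.2, -0.3}},   // output unit 2 (y2)
    };
}
```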

3- Load this simple training dataset:

@RELATION neural_net_test

@ATTRIBUTE x1 continuous

@ATTRIBUTE x2 continuous

@ATTRIBUTE y1 continuous

@ATTRIBUTE y2 continuous

@DATA

0.3,0.7,0.1,1.0
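Because the dataset holds a single pattern, hardcoding it is simplest. If you would rather read the `@DATA` row, the sketch below shows one way to do it. It assumes the first two columns are the inputs (x1, x2) and the remaining columns are the targets (y1, y2), following the `@ATTRIBUTE` order. `Pattern` and `parseDataRow` are hypothetical names.

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// One training pattern split into inputs and targets.
struct Pattern {
    std::vector<double> inputs;   // x1, x2
    std::vector<double> targets;  // y1, y2
};

// Splits one comma-separated @DATA row such as "0.3,0.7,0.1,1.0":
// the first `numInputs` values become inputs, the rest become targets.
Pattern parseDataRow(const std::string& row, std::size_t numInputs) {
    Pattern p;
    std::stringstream ss(row);
    std::string field;
    while (std::getline(ss, field, ',')) {
        double value = std::stod(field);
        if (p.inputs.size() < numInputs) {
            p.inputs.push_back(value);
        } else {
            p.targets.push_back(value);
        }
    }
    return p;
}
```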

4- Write a method that prints the weights of your network. (Relative to the figure above, print the weights in top-to-bottom, left-to-right order.) For exact comparisons, print your double-precision floating-point values to 10 significant digits. (In C++, call "cout.precision(10);" before printing the values. In the default floating-point format, this sets the number of significant digits, not decimal places.)
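The print method below is a minimal sketch. It assumes a hypothetical `Layer`/`Network` layout with one row per unit and the bias weight first, and it reproduces the comma layout of the expected output. Because it takes a `std::ostream&`, the same code can print to `std::cout` or to a `std::ostringstream` for automated comparison.

```cpp
#include <cstddef>
#include <iostream>  // std::cout, for printing to the console
#include <sstream>   // std::ostringstream, for capturing output to compare
#include <vector>

// Hypothetical layout: one matrix per non-input layer, one row per unit,
// each row = {bias weight, weights from the previous layer's units...}.
using Layer = std::vector<std::vector<double>>;
using Network = std::vector<Layer>;

// Prints all weights in the expected output's layout: one unit per line,
// values separated by ", ", and every line except the last ending in ",".
void printWeights(std::ostream& out, const Network& net) {
    out.precision(10);  // 10 significant digits; this setting persists on `out`
    bool firstRow = true;
    for (const Layer& layer : net) {
        for (const std::vector<double>& unit : layer) {
            if (!firstRow) out << ",\n";
            firstRow = false;
            for (std::size_t i = 0; i < unit.size(); ++i) {
                if (i > 0) out << ", ";
                out << unit[i];
            }
        }
    }
    out << '\n';
}
```

Usage: `printWeights(std::cout, net);` before each pattern presentation.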

5- Perform three epochs of training. (Since the dataset contains only one pattern, an epoch is just one pattern presentation.) Use a learning rate of 0.1 and a momentum term of 0.
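For reference, the standard backpropagation rules for logistic sigmoid units are shown below. The instructions do not name the activation function, but working through the first epoch by hand, these rules reproduce the expected values when every delta is computed before any weight changes. The notation is assumed here: $x_i$ is the $i$-th input to unit $j$ (the previous layer's activation, with $x_0 = 1$ feeding the bias weight $w_{j0}$), $t_k$ is a target, $w_{kj}$ is the weight from hidden unit $j$ to unit $k$ in the next layer, $\eta$ is the learning rate, and $\alpha$ is the momentum term.

$$
\begin{aligned}
\sigma(z) &= \frac{1}{1 + e^{-z}}, \qquad o_j = \sigma\Big(w_{j0} + \sum_{i \ge 1} w_{ji}\,x_i\Big) \\
\delta_k &= (t_k - o_k)\,o_k\,(1 - o_k) && \text{output unit } k \\
\delta_j &= o_j\,(1 - o_j) \sum_k w_{kj}\,\delta_k && \text{hidden unit } j \\
\Delta w_{ji}(n) &= \eta\,\delta_j\,x_i + \alpha\,\Delta w_{ji}(n-1), \qquad x_0 = 1
\end{aligned}
$$

With $\eta = 0.1$ and $\alpha = 0$, each update reduces to $\Delta w_{ji} = 0.1\,\delta_j\,x_i$.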

Before each pattern presentation, print the weights. After you forward-propagate the input vector, print the predicted output vector.

After you back-propagate the error, print the error values assigned to each network unit.
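The sketch below shows one online backpropagation step (forward pass, backward pass, then gradient step). It assumes logistic sigmoid units, momentum 0, and a hypothetical `Layer`/`Network` layout with one row per unit and the bias weight first. Deltas are computed with the pre-update weights, and only then does every weight move by `learningRate * delta * input`, with the bias input fixed at 1. `EpochResult` and `trainOnPattern` are hypothetical names, not toolkit functions.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

using Layer = std::vector<std::vector<double>>;  // row = {bias, w_1, ..., w_n}
using Network = std::vector<Layer>;

struct EpochResult {
    std::vector<double> predicted;            // output-layer activations
    std::vector<std::vector<double>> errors;  // delta per unit, first hidden layer first
};

double sigmoid(double z) { return 1.0 / (1.0 + std::exp(-z)); }

// One presentation of a single pattern: forward, backward, then update.
EpochResult trainOnPattern(Network& net, const std::vector<double>& x,
                           const std::vector<double>& target, double learningRate) {
    // Forward pass: acts[0] is the input vector, acts[l + 1] is layer l's output.
    std::vector<std::vector<double>> acts{x};
    for (const Layer& layer : net) {
        std::vector<double> out;
        for (const std::vector<double>& w : layer) {
            double z = w[0];  // bias weight times the constant input 1
            for (std::size_t i = 0; i < acts.back().size(); ++i) z += w[i + 1] * acts.back()[i];
            out.push_back(sigmoid(z));
        }
        acts.push_back(out);
    }

    // Backward pass (output layer first), using the weights before any update.
    std::vector<std::vector<double>> errors(net.size());
    for (std::size_t l = net.size(); l-- > 0;) {
        const std::vector<double>& o = acts[l + 1];
        errors[l].resize(o.size());
        for (std::size_t j = 0; j < o.size(); ++j) {
            double downstream = 0.0;
            if (l + 1 == net.size()) {
                downstream = target[j] - o[j];  // output unit: target minus prediction
            } else {
                for (std::size_t k = 0; k < net[l + 1].size(); ++k)
                    downstream += net[l + 1][k][j + 1] * errors[l + 1][k];
            }
            errors[l][j] = downstream * o[j] * (1.0 - o[j]);  // sigmoid derivative
        }
    }

    // Gradient step, applied only after all deltas are known (momentum 0).
    for (std::size_t l = 0; l < net.size(); ++l) {
        for (std::size_t j = 0; j < net[l].size(); ++j) {
            net[l][j][0] += learningRate * errors[l][j];
            for (std::size_t i = 0; i < acts[l].size(); ++i)
                net[l][j][i + 1] += learningRate * errors[l][j] * acts[l][i];
        }
    }
    return {acts.back(), errors};
}
```

To reproduce the log, call `trainOnPattern(net, {0.3, 0.7}, {0.1, 1.0}, 0.1)` three times. Print the weights before each call, then print `predicted` and `errors` after it. The errors come out in the same order as the expected error values: first hidden layer, second hidden layer, then outputs.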

6- Compare your printed values with the expected output below. Your values should match all 10 printed significant digits. If your numbers are off, you have a bug.
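To automate the comparison, one option is to format each value exactly as the expected output does and then compare the text. `matchesPrinted` is a hypothetical helper. A value that sits on a rounding boundary can still differ in its last printed digit; in that case, a numeric tolerance (for example, an absolute difference below 1e-9) is the more forgiving check.

```cpp
#include <sstream>
#include <string>

// Formats `value` with 10 significant digits in C++'s default floating-point
// notation (as cout.precision(10) would) and compares it with the expected text.
bool matchesPrinted(double value, const std::string& expected) {
    std::ostringstream os;
    os.precision(10);
    os << value;
    return os.str() == expected;
}
```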

Weights:

0.1, 0.2, -0.1,

-0.2, 0.3, -0.3,

0.1, -0.2, -0.3,

0.2, -0.1, 0.3,

0.2, -0.1, 0.3,

0.1, -0.2, -0.3

Input vector: 0.3, 0.7

Target output: 0.1, 1

Forward propagating...

Predicted output: 0.5802212893, 0.4591181386

Back propagating...

Error values:

0.0006498826879, -0.001076309464

-0.003775554904, -0.01849687375

-0.1169648797, 0.1343164751

Descending gradient...

Weights:

0.1000649883, 0.2000194965, -0.09995450821,

-0.2001076309, 0.2999677107, -0.3000753417,

0.09962244451, -0.200197267, -0.3001588284,

0.1981503126, -0.1009664336, 0.2992218814,

0.188303512, -0.1054666053, 0.2933556532,

0.1134316475, -0.1937224306, -0.2923699726

Input vector: 0.3, 0.7

Target output: 0.1, 1

Forward propagating...

Predicted output: 0.5757806352, 0.4643173074

Back propagating...

Error values:

0.0006201909322, -0.001060640521

-0.003374132411, -0.01792982084

-0.1162128911, 0.1332386127

Descending gradient...

Weights:

0.1001270074, 0.2000381022, -0.09991109485,

-0.200213695, 0.2999358915, -0.3001495865,

0.09928503127, -0.200373569, -0.300300756,

0.1963573305, -0.1019032854, 0.2984676916,

0.1766822229, -0.1108965022, 0.2867617159,

0.1267555088, -0.1874970289, -0.2848099931
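Several first-epoch values can be checked by hand. The notation here is assumed: $h$ is the first hidden layer's activations, $g$ the second's, $y$ the output units, $w_{y_1,0}$ output unit 1's bias weight, and $\sigma$ the logistic sigmoid. Intermediate activations are rounded, so only the final digits shown are approximate.

$$
\begin{aligned}
h_1 &= \sigma(0.1 + 0.2 \cdot 0.3 - 0.1 \cdot 0.7) = \sigma(0.09) \approx 0.5225 \\
h_2 &= \sigma(-0.2 + 0.3 \cdot 0.3 - 0.3 \cdot 0.7) = \sigma(-0.32) \approx 0.4207 \\
g_1 &= \sigma(0.1 - 0.2\,h_1 - 0.3\,h_2) \approx \sigma(-0.1307) \approx 0.4674 \\
\delta_{y_1} &= (0.1 - 0.5802212893)(0.5802212893)(1 - 0.5802212893) \approx -0.1169648797 \\
\delta_{y_2} &= (1 - 0.4591181386)(0.4591181386)(1 - 0.4591181386) \approx 0.1343164751 \\
\delta_{g_1} &= g_1(1 - g_1)\big[(-0.1)\,\delta_{y_1} + (-0.2)\,\delta_{y_2}\big] \approx 0.24894 \times (-0.0151668) \approx -0.0037756 \\
w_{y_1,0} &\leftarrow 0.2 + 0.1 \times (-0.1169648797) \approx 0.188303512
\end{aligned}
$$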