Chapter 3 Data Objects, Packages, and Datasets

"In God we trust. All others [must] have data."

- Edwin R. Fisher, cancer pathologist

3.1 Data Storage Objects

There are five primary types of data storage objects in R. These are: (atomic) vectors, matrices, arrays, dataframes, and lists1.

3.1.1 Atomic Vectors

Atomic vectors contain data with order and length, but no dimension. This is clearly different from the linear algebra conception of a vector. In linear algebra, a row vector with \(n\) elements has dimension \(1 \times n\) (1 row and \(n\) columns), whereas a column vector has dimension \(n \times 1\).

We can create atomic vectors with the function c, which means combine.
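A minimal sketch of such a call, followed by three quick checks. The object name x and its values are assumptions made for illustration.

```r
# Assumed example vector; c() combines its arguments into an atomic vector
x <- c(1, 2, 3)
is.vector(x)  # is x a vector?
length(x)     # number of elements
dim(x)        # atomic vectors have no dimension attribute, so this is NULL
```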

[1] TRUE
[1] 3
NULL

We can add a names attribute to vector elements. For example,

a b c
1 2 3

$names
[1] "a" "b" "c"

[1] "a" "b" "c"

The function as.matrix(x) (see Section 3.3.2) can be used to coerce x to have a matrix structure with dimension \(3 \times 1\) (3 rows and 1 column). Thus, in R a matrix has dimension, but a vector does not.

[1] 3 1

Recall (Section 2.3.4) that an object’s base type defines the (R internal) type or storage mode of any object. Recall further that the 25 base types include "integer", "double", "complex", and "character". Elements of vectors must have a single data storage mode. Thus, a vector cannot contain both numeric and character data.

Importantly, when an element-wise operation is applied to two vectors of unequal length, R automatically recycles elements of the shorter vector, and generates a warning if the longer length is not a multiple of the shorter length. For instance:
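A short sketch of recycling with vectors of length three and five, plus a contrasting case that recycles silently. The name res and the second pair of vectors are assumptions added for illustration.

```r
# Lengths 3 and 5: 5 is not a multiple of 3, so R recycles and warns
res <- c(1, 2, 3) + c(1, 0, 4, 5, 13)
res
# Lengths 2 and 4: recycling is silent because 4 is a multiple of 2
c(1, 2) + c(10, 20, 30, 40)
```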

Warning in c(1, 2, 3) + c(1, 0, 4, 5, 13): longer object length is not a multiple of shorter object length
[1] 2 2 7 6 15

In this case, the result of the addition of the two vectors is: \(1 + 1, 2 + 0, 3 + 4, 1 + 5\), and \(2 + 13\). Thus, the first two elements in the first object are recycled in the vector-wise addition.

3.1.2 Matrices

Matrices are two-dimensional (row and column) data structures whose elements all have the same data storage mode (typically "double").

The function matrix() can be used to create matrices.

[,1] [,2]
[1,] 1 3
[2,] 2 2

Note that matrix() enters data “by column.” That is, the first two entries in the data argument are placed in column one, and the last two entries are placed in column two. One can enter data “by row” by adding the argument byrow = TRUE.
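Both fill orders can be sketched as follows. The data values are inferred from the printed matrices, the name A follows the text, and the name B is an assumption.

```r
# Default: data fill the matrix column by column
A <- matrix(c(1, 2, 3, 2), nrow = 2, ncol = 2)
A
# byrow = TRUE fills the matrix row by row instead
B <- matrix(c(1, 2, 3, 2), nrow = 2, ncol = 2, byrow = TRUE)
B
```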

[,1] [,2]
[1,] 1 2
[2,] 3 2

Matrix algebra operations can be applied directly to R matrices (Table 3.1). More complex matrix analyses are also possible, including eigenanalysis (function eigen()), and singular value, QR, and Cholesky decompositions (the functions svd(), qr(), and chol(), respectively).

| Operator | Operation | To find: | We type: |
|---|---|---|---|
| t() | Matrix transpose | \(\boldsymbol{A}^T\) | t(A) |
| %*% | Matrix multiply | \(\boldsymbol{A} \cdot \boldsymbol{A}\) | A%*%A |
| det() | Determinant | \(Det(\boldsymbol{A})\) | det(A) |
| solve() | Matrix inverse | \(\boldsymbol{A}^{-1}\) | solve(A) |

Example 3.1

The matrix A, defined above, has the form:

\[\boldsymbol{A} = \begin{bmatrix}

1 & 3\\

2 & 2

\end{bmatrix}.\]

Consider the operations:
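These operations might be entered as below, using the functions listed in Table 3.1. A is rebuilt here so that the sketch is self-contained.

```r
A <- matrix(c(1, 2, 3, 2), nrow = 2)
t(A)      # transpose
A %*% A   # matrix product
det(A)    # determinant
solve(A)  # inverse
```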

[,1] [,2]
[1,] 1 2
[2,] 3 2

[,1] [,2]
[1,] 7 9
[2,] 6 10

[1] -4

[,1] [,2]
[1,] -0.5 0.75
[2,] 0.5 -0.25

\(\blacksquare\)

We can use the function cbind() to combine vectors into matrix columns,

a b
[1,] 1 2
[2,] 2 3
[3,] 3 4

and use the function rbind() to combine vectors into matrix rows.

[,1] [,2] [,3]
a 1 2 3
b 2 3 4

3.1.3 Arrays

Arrays are one-dimensional, two-dimensional (matrix), or three- or higher-dimensional data structures whose elements contain a single type of data. Thus, while all matrices are arrays, not all arrays are matrices.

[1] "matrix" "array"

As with matrices, elements in arrays can have only one data storage mode.

[1] "double"

The function array() can be used to create arrays. The first argument in array() defines the data. The second argument is a vector that defines both the number of dimensions (this will be the length of the vector), and the number of levels in each dimension (numbers in dimension elements).

Example 3.2

Here is a \(2 \times 2 \times 2\) array:
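One way such an array might be built. The name some.array is hypothetical, and the data 1:8 are inferred from the printed values.

```r
# First argument: the data; second argument: the extent of each dimension
some.array <- array(1:8, dim = c(2, 2, 2))
some.array
class(some.array)
```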

, , 1

[,1] [,2]

[1,] 1 3

[2,] 2 4

, , 2

[,1] [,2]

[1,] 5 7

[2,] 6 8

[1] "array"

\(\blacksquare\)

3.1.4 Dataframes

Dataframes are two-dimensional structures whose columns can have different data storage modes (e.g., quantitative and categorical). The function data.frame() can be used to create dataframes.

numeric non.numeric
1 1 a
2 2 b
3 3 c

[1] "data.frame"

Because of the possibility of different data storage modes for distinct columns, the data storage mode of a dataframe is "list". Specifically, a dataframe is a list, whose storage elements are columns.

[1] "list"

A names attribute will exist for each dataframe column2.

[1] "numeric" "non.numeric"

The $ operator allows access to dataframe columns.

[1] "a" "b" "c"

The function attach() allows R to recognize column names of a dataframe as global variables.

[1] "a" "b" "c"

The function detach() is the programming inverse of attach().

Error in eval(expr, envir, enclos): object 'non.numeric' not found

The functions rm() and remove() will entirely remove any R-object, including a vector, matrix, or dataframe from a session. To remove all objects from the workspace one can use rm(list=ls()) or (in RStudio) the “broom” button in the environments and history panel3.

A safer alternative to attach() is the function with(). Using with() eliminates concerns about multiple variables with the same name becoming mixed up in functions. This is because the variable names for a dataframe specified in with() will not be permanently attached in an R-session.
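A minimal sketch of with(), assuming a dataframe like the one printed earlier in this section. The name dat is hypothetical.

```r
# Hypothetical dataframe mirroring the one printed earlier in this section
dat <- data.frame(numeric = c(1, 2, 3), non.numeric = c("a", "b", "c"))
# Column names are visible only while the expression is evaluated
with(dat, non.numeric)
# Afterwards the column name is not a variable in the workspace
exists("non.numeric")
```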

[1] "a" "b" "c"

3.1.5 Lists

Lists are often used to contain miscellaneous associated objects. Like dataframes, lists need not use a single data storage mode. Unlike dataframes, however, lists can hold objects that are not two-dimensional and that belong to different classes, including character strings (i.e., units of character objects), multiple matrices and dataframes of varying dimensionality, and even other lists. The function list() can be used to create lists.

$a
[1] 1 2 3

$b
[1] "this.is.a.list"

[1] "list"
[1] "list"

Objects in lists can be called using the $ operator. Here is the character string b from ldata1.

[1] "this.is.a.list"

We note that to create an R-object containing character strings, we need to place quotation marks around entries.

[1] "low" "med" "high"

The function str() attempts to display the internal structure of an R object. It is extremely useful for succinctly displaying the contents of complex objects like lists.

List of 2
$ a: num [1:3] 1 2 3
$ b: chr "this.is.a.list"

We are told that ldata1 is a list containing two objects: a sequence of numbers from 1 to 3, and a character string.

The function do.call() is useful for large scale manipulations of data storage objects. For example, what if you had a list containing multiple dataframes with the same column names that you wanted to bind together?

ldata2 <- list(
  df1 = data.frame(lo.temp = c(-1,3,5), high.temp = c(78, 67, 90)),
  df2 = data.frame(lo.temp = c(-4,3,7), high.temp = c(75, 87, 80)),
  df3 = data.frame(lo.temp = c(-0,2), high.temp = c(70, 80))
)

You could do something like:
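A sketch of one such call, assuming rbind() is the binding function. The name all.temps is hypothetical.

```r
ldata2 <- list(
  df1 = data.frame(lo.temp = c(-1, 3, 5), high.temp = c(78, 67, 90)),
  df2 = data.frame(lo.temp = c(-4, 3, 7), high.temp = c(75, 87, 80)),
  df3 = data.frame(lo.temp = c(0, 2), high.temp = c(70, 80))
)
# do.call() passes the list elements as the arguments of rbind()
all.temps <- do.call(rbind, ldata2)
all.temps
```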

lo.temp high.temp
df1.1 -1 78
df1.2 3 67
df1.3 5 90
df2.1 -4 75
df2.2 3 87
df2.3 7 80
df3.1 0 70
df3.2 2 80

Or what if I wanted to replicate the df3 dataframe from ldata2 above, by stacking three copies of it on top of one another? I could do something like:
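A sketch under the assumption that three copies of df3 are stacked. The names copies and stacked are hypothetical, and df3 is rebuilt so the block runs on its own.

```r
df3 <- data.frame(lo.temp = c(0, 2), high.temp = c(70, 80))
# replicate() with simplify = FALSE returns a list holding three copies of df3
copies <- replicate(3, df3, simplify = FALSE)
# do.call() then binds the copies together row-wise
stacked <- do.call(rbind, copies)
stacked
```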

lo.temp high.temp
1 0 70
2 2 80
3 0 70
4 2 80
5 0 70
6 2 80

Note the use of the function replicate().

3.2 Boolean Operations

Computer operations that dichotomously classify true and false statements are called logical or Boolean. In R, a Boolean operation will always return one of the values TRUE or FALSE. R logical operators are listed in Table 3.2.

| Operator | Operation | To ask: | We type: |
|---|---|---|---|
| > | \(>\) | Is x greater than y? | x > y |
| >= | \(\geq\) | Is x greater than or equal to y? | x >= y |
| < | \(<\) | Is x less than y? | x < y |
| <= | \(\leq\) | Is x less than or equal to y? | x <= y |
| == | \(=\) | Is x equal to y? | x == y |
| != | \(\neq\) | Is x not equal to y? | x != y |
| & | and | Do x and y equal z? | x == z & y == z |
| && | and (control flow) | Do x and y equal z? | x == z && y == z |
| \| | or | Do x or y equal z? | x == z \| y == z |
| \|\| | or (control flow) | Do x or y equal z? | x == z \|\| y == z |

Note that there are two ways to specify “and” (& and &&), and two ways to specify “or” (| and ||). The longer forms of “and” and “or” evaluate queries from left to right, stopping when a result is determined. Thus, this form is more appropriate for programming control-flow operations.

Example 3.3

For demonstration purposes, here is a simple dataframe:

dframe <- data.frame(
  Age = c(18,22,23,21,22,19,18,18,19,21),
  Sex = c("M","M","M","M","M","F","F","F","F","F"),
  Weight_kg = c(63.5,77.1,86.1,81.6,70.3,49.8,54.4,59.0,65,69)
)
dframe

Age Sex Weight_kg
1 18 M 63.5
2 22 M 77.1
3 23 M 86.1
4 21 M 81.6
5 22 M 70.3
6 19 F 49.8
7 18 F 54.4
8 18 F 59.0
9 19 F 65.0
10 21 F 69.0

The R logical operator for equals is == (Table 3.2). Thus, to identify Age outcomes equal to 21 we type:

[1] FALSE FALSE FALSE TRUE FALSE FALSE FALSE FALSE FALSE TRUE

The unary operator for “not” is ! (Table 3.2). Thus, to identify Age outcomes not equal to 21 we could type:

[1] TRUE TRUE TRUE FALSE TRUE TRUE TRUE TRUE TRUE FALSE

Multiple Boolean queries can be made. Here we identify Age data less than 19, or equal to 21.

[1] TRUE FALSE FALSE TRUE FALSE FALSE TRUE TRUE FALSE TRUE

Queries can involve multiple variables. For instance, here we identify males less than or equal to 21 years old that weigh less than 80 kg.

[1] TRUE FALSE FALSE TRUE FALSE FALSE FALSE FALSE FALSE FALSE

\(\blacksquare\)
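The first three queries in Example 3.3 might be written as follows, using $ to reach the Age column. Other forms, such as with(), would work equally well, and the exact calls behind the printed output are an assumption.

```r
dframe <- data.frame(
  Age = c(18, 22, 23, 21, 22, 19, 18, 18, 19, 21),
  Sex = c("M", "M", "M", "M", "M", "F", "F", "F", "F", "F"),
  Weight_kg = c(63.5, 77.1, 86.1, 81.6, 70.3, 49.8, 54.4, 59.0, 65, 69)
)
dframe$Age == 21                     # equal to 21
dframe$Age != 21                     # not equal to 21
dframe$Age < 19 | dframe$Age == 21   # younger than 19, or exactly 21
```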

3.3 Testing and Coercing Classes

We have already considered functions for identifying the class of an arbitrary R object, foo. These include class(foo) and attr(foo, "class") (Section 2.3.3). We have also considered approaches for identifying the base type of an object, including typeof(foo) (Section 2.3.4). This section considers methods for identifying object membership in particular classes, and coercing an object’s class membership.

3.3.1 Testing

Functions exist to logically test for object membership in major R classes. These functions generally begin with an is. prefix and include: is.matrix(), is.array(), is.list(), is.factor(), is.double(), is.integer(), is.numeric(), is.character(), and many others.

The Boolean function is.numeric() can be used to test if an object or an object’s components behave like numbers4.

[1] TRUE
[1] TRUE

Thus, x contains numbers stored with double precision. However,

[1] FALSE

Data objects with categorical entries can be created using the function factor(). In statistics the term “factor” refers to a categorical variable whose categories (factor levels) are likely replicated as treatments in an experimental design.

[1] 1 2 3 4
Levels: 1 2 3 4

[1] TRUE

The R class factor streamlines many analytical processes, including summarization of a quantitative variable with respect to a factor and specifying interactions of two or more factors. Here we see the interaction of levels in x with levels in another factor, y.

[1] 1.a 2.b 3.c 4.d
16 Levels: 1.a 2.a 3.a 4.a 1.b 2.b 3.b 4.b 1.c 2.c 3.c 4.c 1.d 2.d ... 4.d

Sixteen interactions are possible, although only four actually occur when simultaneously considering x and y.

To decrease memory usage5, objects of class factor have an unexpected base type:

[1] "integer"

Despite this designation, and the fact that categories in x are distinguished using numbers, the entries in x do not have a numerical meaning and cannot be evaluated mathematically.

[1] FALSE

Warning in Ops.factor(x, 5): '+' not meaningful for factors
[1] NA NA NA NA

Occasionally an ordering of categorical levels is desirable. For instance, assume that we wish to apply three different imprecise temperature treatments "low", "med" and "high" in an experiment with six experimental units. While we do not know the exact temperatures of these levels, we know that "med" is hotter than "low" and "high" is hotter than "med". To provide this categorical ordering we can use factor(data, ordered = TRUE) or the function ordered().

x <- factor(c("med","low","high","high","med","low"),
  levels = c("low","med","high"),
  ordered = TRUE)
x

[1] med low high high med low
Levels: low < med < high

[1] TRUE
[1] TRUE

The levels argument in factor() specifies the correct ordering of levels.

The function ifelse() can be applied to atomic vectors or one dimensional arrays (e.g., rows or columns) to evaluate a logical argument and provide particular outcomes if the argument is TRUE or FALSE. The function requires three arguments.

- The first argument, test, gives the logical test to be evaluated.
- The second argument, yes, provides the output if the test is true.
- The third argument, no, provides the output if the test is false.

For instance:
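A sketch of such a call on the Age values from Example 3.3. The cutoff of 21 is an assumption, chosen because it is consistent with the labels shown in this passage.

```r
Age <- c(18, 22, 23, 21, 22, 19, 18, 18, 19, 21)
# test, yes, no: label each age according to the (assumed) cutoff of 21
labels <- ifelse(Age < 21, "Young", "Not so young")
labels
```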

[1] "Young" "Not so young" "Not so young" "Not so young"
[5] "Not so young" "Young" "Young" "Young"
[9] "Young" "Not so young"

A more generalized approach to providing a condition and then defining the consequences (often used in functions) uses the commands if and else, potentially in combination with the functions any() and all(). For instance:

[1] "Young"

and

[1] "Not so Young"

3.3.2 Coercion

Objects can be switched from one class to another using coercion functions that begin with an as. prefix6. Analogues to the testing (is.) functions listed above are: as.matrix(), as.array(), as.list(), as.factor(), as.double(), as.integer(), as.numeric(), and as.character().

For instance, a non-factor object can be coerced to have class factor with the function as.factor().

[1] FALSE
[1] TRUE

Coercion may result in removal and addition of attributes. For instance, conversion from atomic vector to matrix below results in the loss of the names attribute.

eulers_num log_exp pi
2.718282 1.000000 3.141593

[1] "eulers_num" "log_exp" "pi"

NULL

Coercion may also have unexpected results. Here NAs result when attempting to coerce an object with apparent mixed storage modes to class numeric.

Warning: NAs introduced by coercion
[1] NA NA 10

3.3.3 NA

R identifies missing values (empty cells) as NA, which means “not available.” Hence, the R function to identify missing values is is.na().

For example:

[1] FALSE FALSE FALSE FALSE TRUE FALSE FALSE

Conversely, to identify outcomes that are not missing, I would use the “not” operator to specify !is.na().

[1] TRUE TRUE TRUE TRUE FALSE TRUE TRUE

There are a number of R functions for removing missing values. These include na.omit().

[1] 2 3 1 2 3 2
attr(,"na.action")
[1] 5
attr(,"class")
[1] "omit"

We see that R dropped the missing observation and then told us which observation was omitted (observation number 5).

Functions in R often, but not always, have built in capacities to handle missing data, for instance, by calling na.omit(). Consider the following dataframe which provides plant percent cover data for five plant species at two sites. Plant species are identified with four letter codes, consisting of the first two letters of the Linnaean genus and species names.

field.data <- data.frame(ACMI = c(12, 13), ELSC = c(0, 4), CAEL = c(NA, 2),
  CAPA = c(20, 30), TACE = c(0, 2))
row.names(field.data) <- c("site1", "site2")
field.data

ACMI ELSC CAEL CAPA TACE
site1 12 0 NA 20 0
site2 13 4 2 30 2

The function complete.cases() checks for completeness of the data in rows of a data array.

[1] FALSE TRUE

If na.omit() is applied in this context, the entire row containing the missing observation will be dropped.

ACMI ELSC CAEL CAPA TACE
site2 13 4 2 30 2

Unfortunately, this means that information about the other four species at site one will be lost. Thus, it is generally more rational to remove NA values while retaining non-missing values. For instance, many statistical functions have the capacity to base summaries on non-NA data.
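A sketch of such a summary for site one, assuming the row is first converted to a numeric vector with unlist() (the name site1 is hypothetical). The function rowMeans() is shown as an alternative that works on the dataframe itself.

```r
field.data <- data.frame(ACMI = c(12, 13), ELSC = c(0, 4), CAEL = c(NA, 2),
                         CAPA = c(20, 30), TACE = c(0, 2))
row.names(field.data) <- c("site1", "site2")
# Convert the one-row dataframe to a numeric vector before averaging
site1 <- unlist(field.data[1, ])
mean(site1, na.rm = TRUE)            # mean of the four non-missing values
# rowMeans() handles every row at once and also accepts na.rm
rowMeans(field.data, na.rm = TRUE)
```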

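Note that applying mean() directly to a row of a dataframe returns NA with a warning, because a single dataframe row is still a dataframe (a list) rather than a numeric vector:

Warning in mean.default(field.data[1, ], na.rm = T): argument is not numeric or logical: returning NA
[1] NA

3.3.4 NaN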

The designation NaN is associated with the current conventions of the IEEE 754-2008 arithmetic used by R. It means “not a number.” Mathematical operations which produce NaN include:
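Three operations of this kind are sketched below. They are assumptions chosen for illustration, although the last one matches the sin(Inf) call named in the warning that accompanies this passage.

```r
0 / 0        # indeterminate form
Inf - Inf    # difference of infinities
sin(Inf)     # also triggers a warning
```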

[1] NaN
[1] NaN
Warning in sin(Inf): NaNs produced
[1] NaN

3.3.5 NULL

In object oriented programming, a null object has no referenced value or has a defined neutral behavior (Wikipedia 2023). Occasionally one may wish to specify that an R object is NULL. For example, a NULL object can be included as an argument in a function without requiring that it has a particular value or meaning. As with NA and NaN, the NULL specification is easy.

It should be emphasized that R-objects or elements within objects that are NA, NaN or NULL cannot be identified with the Boolean operators == or !=. For instance:
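A minimal sketch of what such comparisons return. The object name x is an assumption.

```r
x <- NULL
x == NULL   # a zero-length logical vector, not TRUE
NA == NA    # NA, not TRUE
```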

logical(0)
[1] NA

Instead one should use is.na(), is.nan() or is.null() to identify NA, NaN or NULL components, respectively.

[1] TRUE
[1] FALSE
[1] TRUE
[1] FALSE

3.4 Accessing and Subsetting Data With []

One can subset data storage objects using square bracket operators, i.e., [], along with a variety of functions7. Because of their simplicity, I focus on square brackets for subsetting here. Gaining skills with square brackets will greatly enhance your ability to manipulate datasets in R. The toy datasets used here are an atomic vector (with a names attribute), a matrix, a three dimensional array, a dataframe, and a list:
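The toy objects might be created along these lines. The names mdat and ddat follow the text of this section; the names vdat, adat, and ldat are assumptions, and the values are inferred from the printed objects.

```r
vdat <- c(a = 1, b = 2, c = 3)                       # named atomic vector
mdat <- matrix(c(1, 2, 3, 4), nrow = 2)              # 2 x 2 matrix
adat <- array(1:8, dim = c(2, 2, 2))                 # 2 x 2 x 2 array
ddat <- data.frame(numeric = c(1, 2, 3),
                   non.numeric = c("a", "b", "c"))   # dataframe
ldat <- list(element1 = c(1, 2, 3),
             element2 = "this.is.a.list")            # list
```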

a b c
1 2 3

[,1] [,2]
[1,] 1 3
[2,] 2 4

, , 1

[,1] [,2]
[1,] 1 3
[2,] 2 4

, , 2

[,1] [,2]
[1,] 5 7
[2,] 6 8

numeric non.numeric
1 1 a
2 2 b
3 3 c

$element1
[1] 1 2 3

$element2
[1] "this.is.a.list"

To obtain the \(i\)th component from an atomic vector, matrix, array, dataframe or list named foo we would specify foo[i]. For instance, here is the first component of our toy data objects:

a
1

[1] 1

[1] 1

numeric
1 1
2 2
3 3

$element1
[1] 1 2 3

Importantly, dataframes and lists view their \(i\)th element as the \(i\)th column and the \(i\)th list element, respectively.

We can also apply double square brackets, i.e., [[]] to list-type objects, i.e., atomic vectors and explicit lists, with similar results. Note, however, that the data subsets are now missing their name attributes.

[1] 1
[1] 1 2 3

If a data storage object has a names attribute, then a name can be placed in square brackets to obtain corresponding data.

numeric
1 1
2 2
3 3

The advantage of square brackets over $ in this application is that several components can be specified simultaneously using the former approach:

non.numeric numeric
1 a 1
2 b 2
3 c 3

If foo has a row \(\times\) column structure, i.e., a matrix, array, or dataframe, we could obtain the \(i\)th column from foo using foo[,i] (or, for a dataframe, foo[[i]]) and the \(j\)th row from foo using foo[j,]. For instance, here is the second column from mdat, and the first row from ddat.

[1] 3 4

numeric non.numeric
1 1 a

The element from foo corresponding to row j and column i can be accessed using: foo[j, i], or foo[,i][j], or foo[j,][i].

[1] 3
[1] 3
[1] 3

Arrays may require more than two indices. For instance, for a three dimensional array, foo, the specification foo[,j,i] will return the entirety of the \(j\)th column in the \(i\)th component of the outermost dimension of foo, whereas foo[k,j,i] will return the \(k\)th element from the \(j\)th column in the \(i\)th component of the outermost dimension of foo.

[1] 3 4
[1] 3
[1] 4

Ranges or particular subsets of elements from a data storage object can also be selected. For instance, here I access rows two and three of ddat:

numeric non.numeric
2 2 b
3 3 c

I can drop data object components by using negative integers in square brackets. Here I obtain an identical result to the example above by dropping row one from ddat:

numeric non.numeric
2 2 b
3 3 c

Here I obtain ddat rows one and three in two different ways:

numeric non.numeric
1 1 a
3 3 c

numeric non.numeric
1 1 a
3 3 c

Square brackets can also be used to rearrange data components:

numeric non.numeric
3 3 c
1 1 a
2 2 b

Duplicate components:

$element2
[1] "this.is.a.list"

$element2
[1] "this.is.a.list"

Or even replace data components:

numeric non.numeric
1 1 d
2 2 e
3 3 f

3.4.1 Subsetting a Factor

Importantly, the factor level structure of a factor will remain intact even if one or more of the levels are entirely removed.

[1] d e f
Levels: d e f

[1] e f
Levels: d e f

Note that the level d remains a characteristic of fdat, even though the cell containing the lone observation of d was removed from the dataset. This outcome is allowed because it is desirable for certain analytical situations (e.g., summarizations that acknowledge missing data for some levels). To remove levels that no longer occur in a factor, we can use the function droplevels().
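A sketch of this behavior, assuming fdat holds the three levels printed in this subsection. The name fsub is hypothetical.

```r
fdat <- factor(c("d", "e", "f"))
fsub <- fdat[-1]     # drop the first observation; its level is still recorded
levels(fsub)
droplevels(fsub)     # the unused level is removed
```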

[1] e f
Levels: e f

3.4.2 Subsetting with Boolean Operators

Boolean (TRUE or FALSE) outcomes can be used in combination with square brackets to subset data. Consider the dataframe used earlier to demonstrate logical commands.

dframe <- data.frame(
  Age = c(18,22,23,21,22,19,18,18,19,21),
  Sex = c("M","M","M","M","M","F","F","F","F","F"),
  Weight_kg = c(63.5,77.1,86.1,81.6,70.3,49.8,54.4,59.0,65,69)
)

Here we extract Age outcomes less than or equal to 21.

[1] 18 21 19 18 18 19 21

We could also use this information to obtain entire rows of the dataframe.
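Both subsets in this passage might be produced as follows. Storing the logical vector under the name keep is an assumption made for readability.

```r
dframe <- data.frame(
  Age = c(18, 22, 23, 21, 22, 19, 18, 18, 19, 21),
  Sex = c("M", "M", "M", "M", "M", "F", "F", "F", "F", "F"),
  Weight_kg = c(63.5, 77.1, 86.1, 81.6, 70.3, 49.8, 54.4, 59.0, 65, 69)
)
keep <- dframe$Age <= 21   # logical vector, one value per row
dframe$Age[keep]           # Age values satisfying the condition
dframe[keep, ]             # entire rows satisfying the condition
```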

Age Sex Weight_kg
1 18 M 63.5
4 21 M 81.6
6 19 F 49.8
7 18 F 54.4
8 18 F 59.0
9 19 F 65.0
10 21 F 69.0

3.4.3 When Subset Is Larger Than Underlying Data

R allows one to request a data subset larger than the underlying data itself, although this results in the generation of filler NAs. Consider the following example:

The atomic vector x has length five. If I ask for a subset of length seven, I get:
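A sketch of this request, with the values of x inferred from the printed result.

```r
x <- c(-2, 3, 4, 6, 45)
length(x)
x[1:7]   # positions 6 and 7 do not exist and are returned as NA
```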

[1] -2 3 4 6 45 NA NA

3.4.4 Subsetting with upper.tri(), lower.tri(), and diag()

We can use square brackets alongside the functions upper.tri(), lower.tri(), and diag() to examine the upper triangle, lower triangle, and diagonal parts of a matrix, respectively.
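A sketch of these three extractions. The name mat follows the text, and the matrix entries are inferred from the printed matrix.

```r
mat <- matrix(c(1, 2, 3, 2, 4, 3, 5, 1, 4), nrow = 3)
mat
mat[upper.tri(mat)]   # elements above the diagonal
mat[lower.tri(mat)]   # elements below the diagonal
diag(mat)             # diagonal elements
```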

[,1] [,2] [,3]
[1,] 1 2 5
[2,] 2 4 1
[3,] 3 3 4

[1] 2 5 1
[1] 2 3 3
[1] 1 4 4

Note that upper.tri() and lower.tri() are used to identify the appropriate triangle in the object mat. Subsetting is then accomplished using square brackets.

3.5 Packages

An R package contains a set of related functions, documentation, and (often) data files that have been bundled together. The so-called R-distribution packages are included with a conventional download of R (Table 3.3). These packages are directly controlled by the R core development team and are extremely well-vetted and trustworthy.

Packages in Table 3.4 constitute the R-recommended packages. These are not necessarily controlled by the R core development team, but are also extremely useful, well-tested, and stable, and like the R-distribution packages, are included in conventional downloads of R.

Aside from distribution and recommended packages, there are a large number of contributed packages that have been created by R-users (\(> 20000\) as of 9/12/2023). Table 3.5 lists a few.

3.5.1 Package Installation

Contributed packages can be installed from CRAN (the Comprehensive R Archive Network). To do this, one can go to Packages\(>\)Install package(s) on the R-GUI toolbar, and choose a nearby CRAN mirror site to minimize download time (non-Unix only). Once a mirror site is selected, the packages available at the site will appear. One can simply click on the desired packages to install them. Packages can also be downloaded directly from the command line using install.packages("package name"). Thus, to install the package vegan (see Table 3.5), I would simply type:

install.packages("vegan")

If local web access is not available, packages can be installed as compressed (.zip, .tar) files which can then be placed manually on a workstation by inserting the package files into the library folder within the top level R directory, or into a path-defined R library folder in a user directory.

The installation pathway for contributed packages can be identified using .libPaths().

[1] "C:/Users/ahoken/AppData/Local/R/win-library/4.4"
[2] "C:/Program Files/R/R-4.4.2/library"

This process can be facilitated in RStudio via the plots and files panel (see Section 2.9).

Several functions exist for updating packages and for comparing currently installed versions of packages with their latest versions on CRAN or other repositories. The function old.packages() indicates which currently installed packages have a (suitable) later version. Here are a few of the packages I have installed that have later versions.

Package Installed Built ReposVer
ape "ape" "5.8" "4.4.1" "5.8-1"
askpass "askpass" "1.2.0" "4.4.1" "1.2.1"
bit "bit" "4.0.5" "4.4.1" "4.5.0.1"
bit64 "bit64" "4.0.5" "4.4.1" "4.5.2"
bookdown "bookdown" "0.39" "4.4.0" "0.41"
broom "broom" "1.0.6" "4.4.1" "1.0.7"

The function update.packages() will identify, and offer to download and install later versions of installed packages.

3.5.2 Loading Packages

Once a contributed package is installed on a computer it does not need to be re-installed for each new R session. However, for use in an R session, recommended packages and installed contributed packages will need to be loaded. This can be done using the library() function, or point and click tools if one is using RStudio. For example, to load the installed contributed vegan package, I would type:

library(vegan)

Loading required package: permute
Loading required package: lattice
This is vegan 2.6-8

We see that two other packages are loaded when we load vegan: permute and lattice.

To detach vegan from the global environment, I would type:

detach(package:vegan)

We can check if a specific package is loaded using the function .packages(). Most of the R distribution packages are loaded (by default) upon opening a session. Exceptions include compiler, grid, parallel, splines, stats4, and tools.

bpack <- c("base", "compiler", "datasets", "grDevices", "graphics",
  "grid", "methods", "parallel", "splines", "stats", "stats4",
  "tcltk", "tools", "translations", "utils")
sapply(bpack, function(x) (x %in% .packages()))

base compiler datasets grDevices graphics
TRUE FALSE TRUE TRUE TRUE
grid methods parallel splines stats
FALSE TRUE FALSE FALSE TRUE
stats4 tcltk tools translations utils
FALSE TRUE FALSE FALSE TRUE

The function sapply(), which allows application of a function to each element in a vector or list, is formally introduced in Section 4.1.1.

The package vegan is no longer loaded because we applied detach(package:vegan) earlier.

[1] FALSE

We can get a summary of information about a session, including details about the version of R being used, the underlying computer platform, and the loaded packages with the function sessionInfo().

[1] "R version 4.4.2 (2024-10-31 ucrt)"
[1] "Windows 10 x64 (build 17134)"
[1] "nlme" "cli" "rlang" "xfun" "jsonlite" "deSolve"

This information is important to include when reporting issues to package maintainers.

Once a package is installed its functions can generally be accessed using the double colon metacharacter, ::, even if the package is not actually loaded. For instance, the function vegan::diversity() will allow access to the function diversity() from vegan, even when vegan is not loaded.

[1] function (x, index = "shannon", groups, equalize.groups = FALSE,
[2] MARGIN = 1, base = exp(1))

The triple colon metacharacter, :::, can be used to access internal package functions. These functions, however, are generally kept internal for good reason, and probably shouldn’t be used outside of the context of the rest of the package.

3.5.3 Other Package Repositories

Aside from CRAN, there are currently three other extensive repositories of R packages. First, the Bioconductor project (http://www.bioconductor.org/packages/release/Software/html) contains a large number of packages for the analysis of data from current and emerging biological assays. Bioconductor packages are generally not stored at CRAN. Packages can be downloaded from Bioconductor using an R script called biocLite (superseded in current Bioconductor releases by the install() function of the BiocManager package). To access the script and download the package RCytoscape from Bioconductor, I could type:

Second, the Posit Package Manager (formerly the RStudio Package Manager) provides a repository interface for R packages from CRAN, Bioconductor, and packages for the Python system (see Section 9.5). Third, R-forge (http://r-forge.r-project.org/) contains releases of packages that have not yet been implemented into CRAN, and other miscellaneous code. Bioconductor, Posit, and R-forge can be specified as repositories from Packages\(>\)Select Repositories in the R-GUI (non-Unix only). Other informal R package and code repositories currently include GitHub and Zenodo.

| Package | Maintainer | Topic(s) addressed by package | Author/Citation |

|---|---|---|---|

| base | R Core Team | Base R functions | R Core Team (2023) |

| compiler | R Core Team | R byte code compiler | R Core Team (2023) |

| datasets | R Core Team | Base R datasets | R Core Team (2023) |

| grDevices | R Core Team | Devices for base and grid graphics | R Core Team (2023) |

| graphics | R Core Team | R functions for base graphics | R Core Team (2023) |

| grid | R Core Team | Grid graphics layout capabilities | R Core Team (2023) |

| methods | R Core Team | Formal methods and classes for R objects | R Core Team (2023) |

| parallel | R Core Team | Support for parallel computation | R Core Team (2023) |

| splines | R Core Team | Regression spline functions and classes | R Core Team (2023) |

| stats | R Core Team | R statistical functions | R Core Team (2023) |

| stats4 | R Core Team | Statistical functions with S4 classes | R Core Team (2023) |

| tcltk | R Core Team | Language bindings to Tcl/Tk | R Core Team (2023) |

| tools | R Core Team | Tools for package development/administration | R Core Team (2023) |

| utils | R Core Team | R utility functions | R Core Team (2023) |

| Package | Maintainer | Topic(s) addressed by package | Author/Citation |

|---|---|---|---|

| KernSmooth | B. Ripley | Kernel smoothing | Wand (2023) |

| MASS | B. Ripley | Important statistical methods | Venables and Ripley (2002) |

| Matrix | M. Maechler | Classes and methods for matrices | Bates, Maechler, and Jagan (2023) |

| boot | B. Ripley | Bootstrapping | Canty and Ripley (2022) |

| class | B. Ripley | Classification | Venables and Ripley (2002) |

| cluster | M. Maechler | Cluster analysis | Maechler et al. (2022) |

| codetools | S. Wood | Code analysis tools | Tierney (2023) |

| foreign | R Core Team | Data stored by non-R software | R Core Team (2023) |

| lattice | D. Sarkar | Lattice graphics | Sarkar (2008) |

| mgcv | S. Wood | Generalized Additive Models | S. N. Wood (2011), S. N. Wood (2017) |

| nlme | R Core Team | Linear and non-linear mixed effect models | Pinheiro and Bates (2000) |

| nnet | B. Ripley | Feed-forward neural networks | Venables and Ripley (2002) |

| rpart | B. Ripley | Partitioning and regression trees | Venables and Ripley (2002) |

| spatial | B. Ripley | Kriging and point pattern analysis | Venables and Ripley (2002) |

| Package | Maintainer | Topic(s) addressed by package | Author/Citation |

|---|---|---|---|

| asbio | K. Aho | Stats pedagogy and applied stats | Aho (2023) |

| car | J. Fox | General linear models | Fox and Weisberg (2019) |

| coin | T. Hothorn | Non-parametric analysis | Hothorn et al. (2006), Hothorn et al. (2008) |

| ggplot2 | H. Wickham | Tidyverse grid graphics | Wickham (2016) |

| lme4 | B. Bolker | Linear mixed-effects models | Bates et al. (2015) |

| plotrix | J. Lemon et al. | Helpful graphical ideas | Lemon (2006) |

| spdep | R. Bivand | Spatial analysis | Bivand, Pebesma, and Gómez-Rubio (2013), Pebesma and Bivand (2023) |

| tidyverse | H. Wickham | Data science under the tidyverse | Wickham et al. (2019) |

| vegan | J. Oksanen | Multivariate and ecological analysis | Oksanen et al. (2022) |

3.5.4 Accessing Package Information

Important information concerning a package can be obtained from the packageDescription() family of functions. Here is the version of the R contributed package asbio on my workstation:

[1] '1.9.7'

Here is the version of R used to build the installed version of asbio, and the package’s build date:

[1] "R 4.4.0; ; 2024-04-27 01:54:29 UTC; windows"
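The calls that produced the version and build strings are not shown in this section. A minimal sketch, assuming they were packageVersion() and the Built field of packageDescription(), is given below. The package name stats is a stand-in chosen so the code runs on any installation; substitute "asbio" once that package is installed.

```r
# Query version and build information for an installed package.
# "stats" ships with R; it is used here only as a stand-in for "asbio".
pkg <- "stats"

# Version of the installed package (an object of class "package_version")
ver <- packageVersion(pkg)
print(ver)

# The Built field records the R version, platform, and date used to build the package
built <- packageDescription(pkg)$Built
print(built)

# Several DESCRIPTION fields can be requested at once
packageDescription(pkg, fields = c("Package", "Version"))
```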

3.5.5 Accessing Datasets in R-packages

The command:

results in a listing of the datasets available from within the R packages loaded in the current R session, whereas the code:

results in a listing of the datasets available from within all installed R packages.

If one is interested in datasets from a particular package, for instance the package datasets, one could type:

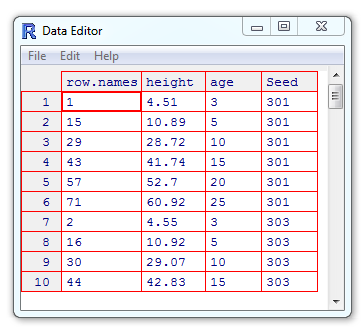
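The three listings described above can be sketched with the function data(). This is an assumed reconstruction, since the original commands are not shown. The results are assigned to objects (loaded, installed, and ds are illustrative names) so that nothing is sent to a pager.

```r
# Datasets in packages currently attached to the search path
loaded <- data()

# Datasets in all installed packages (can be slow when many packages are installed)
installed <- data(package = .packages(all.available = TRUE))

# Datasets in a single package
ds <- data(package = "datasets")

# Each listing is stored in a character matrix called `results`
head(ds$results[, c("Package", "Item")])
```

Printing one of these objects at the console, e.g., typing ds, displays the listing in a viewer window or pager.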

The dataset Loblolly in the datasets package contains height, age, and seed type records for a sample of loblolly pine trees (Pinus taeda). To access the data we can type:\(^{8}\)

The data are now contained in a dataframe (called Loblolly) that we can manipulate and analyze.
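As a sketch of the steps in this section, the calls below load the dataset and then inspect its classes, first rows, and summary. These are assumed to be the standard calls for each task; the exact arguments used to produce the printed output in this section are not shown.

```r
# Load Loblolly into the workspace (optional for the datasets package, see the footnote)
data(Loblolly)

# Classes of the object, including data.frame
class(Loblolly)

# First few rows
head(Loblolly)

# Mean and five-number summary for numeric columns, counts for factor levels
summary(Loblolly)
```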

[1] "nfnGroupedData" "nfGroupedData" "groupedData" "data.frame"

Note that there are three classes (nfnGroupedData, nfGroupedData, groupedData) in addition to data.frame. These classes allow recognition of the nested structure of the age and Seed variables (the formula height ~ age | Seed defines height as a function of age within Seed), and facilitate the analysis of the data using mixed effect model algorithms in the package nlme. We can get a general feel for the Loblolly dataset by accessing the first few rows using the function head():

Grouped Data: height ~ age | Seed

height age Seed

1 4.51 3 301

15 10.89 5 301

29 28.72 10 301

43 41.74 15 301

57 52.70 20 301

The function summary() provides the mean and a conventional five-number summary (minimum, 1st quartile, median, 3rd quartile, maximum) of both quantitative variables (height and age) and a count of the number of observations (six) in each level of the categorical variable Seed.

height age Seed

Min. : 3.46 Min. : 3.0 329 : 6

1st Qu.:10.47 1st Qu.: 5.0 327 : 6

Median :34.00 Median :12.5 325 : 6

Mean :32.36 Mean :13.0 307 : 6

3rd Qu.:51.36 3rd Qu.:20.0 331 : 6

Max. :64.10 Max. :25.0 311 : 6

(Other):48

R provides a spreadsheet-style data editor if one types fix(x), when x is a dataframe or a two dimensional array. For instance, the command fix(Loblolly) will open the Loblolly pine dataframe in the data editor (Figure 3.1). When x is a function or character string, then a script editor is opened containing x. The data editor has limited flexibility compared to software whose main interface is a spreadsheet, and whose primary purpose is data entry and manipulation, e.g., Microsoft Excel\(^{\circledR}\).

Changes made to an object using fix() will only be maintained for the current work session. They will not permanently alter objects brought in remotely to a session. The function View(x) (RStudio only) will provide a non-editable spreadsheet representation of a dataframe or numeric array.

Figure 3.1: The default R spreadsheet editor.

3.6 Facilitating Command Line Data Entry

Command line data entry is made easier with several R functions. The function scan() speeds up data entry because a prompt is given for each data point, and separators are created by the function itself. Data entries can be separated using the space bar or line breaks. The scan() function will be terminated by an additional blank line or an end of file (EOF) signal. The EOF signal is Ctrl\(+\)D in Unix-alike operating systems and Ctrl\(+\)Z in Windows.

Below I enter the numbers 1, 2, and 3 as datapoints, separated by spaces, and end data entry using an additional line break. The data are saved as the object a.
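The interactive session itself is not reproduced here. As a sketch, the commented line shows the interactive call, and the line after it uses the text argument of scan() to read the same three entries from a string, which gives the same result without a prompt.

```r
# Interactive use: type 1 2 3, press Enter, then press Enter again on the empty line.
# a <- scan()

# Non-interactive equivalent: read the same entries from a character string
a <- scan(text = "1 2 3")
a
```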

Sequences can be generated quickly in R using the : operator

[1] 1 2 3 4 5 6 7 8 9 10

or the function seq(), which allows additional options:

[1] 1 2 3 4 5 6 7 8 9 10

[1] 1 3 5 7 9

[1] 1 4 7 10

Entries can be repeated with the function rep(). For example, to repeat the sequence 1 through 5, five times, I could type:

[1] 1 2 3 4 5 1 2 3 4 5 1 2 3 4 5 1 2 3 4 5 1 2 3 4 5

Note that the first argument in rep() defines the thing we want to repeat and the second argument, 5, specifies the number of repetitions. I can use the argument each to repeat individual elements a particular number of times.

[1] 1 1 2 2 3 3 4 4 5 5

We can use seq() and rep() simultaneously to create complex sequences. For instance, to repeat the sequence 1,3,5,7,9,11,13,15,17,19, three times, we could type:
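The calls behind the printed sequences in this section are not shown. The sketch below gives one set of calls that reproduces each of them, ending with the combined seq() and rep() example. The argument choices (by, length.out, each) and the object names are assumptions inferred from the output.

```r
# Integer sequence with the : operator
s1 <- 1:10

# The same sequence with seq(), then with a step size and with a fixed length
s2 <- seq(1, 10)
s3 <- seq(1, 10, by = 2)           # 1 3 5 7 9
s4 <- seq(1, 10, length.out = 4)   # 1 4 7 10

# Repeat the whole sequence 1 through 5, five times
r1 <- rep(1:5, 5)

# Repeat each element twice before moving to the next
r2 <- rep(1:5, each = 2)

# Combine seq() and rep(): the odd numbers 1 through 19, three times
r3 <- rep(seq(1, 19, by = 2), 3)
r3
```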

[1] 1 3 5 7 9 11 13 15 17 19 1 3 5 7 9 11 13 15 17 19 1 3 5

[24] 7 9 11 13 15 17 19

3.7 Importing Data Into R

While it is possible to enter data into R at the command line, this will normally be inadvisable except for small datasets. In general it will be much easier to import data. R can read data from many different kinds of formats including .txt and .csv (comma-separated values) files, and files with space, tab, and carriage return datum separators. I generally organize my datasets using Excel\(^{\circledR}\) or some other spreadsheet program (although R can handle much larger datasets than these platforms), then save them as .csv files. I then import the .csv files into R using the read.table(), read.csv(), or scan() functions. The function load() can be used to import data files in .rda data formats, or other R objects. Datasets read into R will generally be of class dataframe and data storage mode list.

3.7.1 Import Using read.table(), read.csv(), and scan()

The read.table() function can import data organized under a wide range of formats. Its first three arguments are very important.

- file defines the name of the file and directory hierarchy which the data are to be read from.
- header is a logical (TRUE or FALSE) value indicating whether file contains column names as its first line.
- sep refers to the type of data separator used for columns. Comma separated files use commas to separate data entries. Thus, in this case sep = ",". Tab separators are specified as "\t". Space separators are specified simply as " ".

Other useful read.table() arguments include row.names, header, and na.strings. The specification row.names = 1 indicates that the first column in the imported dataset contains row names. The specification header = TRUE indicates that the first row of data contains column names. This is the default setting for read.csv(), whereas read.table() assumes header = FALSE unless the first row contains one fewer field than the remaining rows. The argument na.strings = "." indicates that missing values in the imported dataset are designated with periods. By default na.strings = "NA".

As an example of read.table() usage, assume that I want to import a .csv file called veg.csv located in a folder called veg_data, in my working directory. The first row of veg.csv contains column names, while the first column contains row names. Missing data in the file are indicated with periods. I would type:
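The original call is not shown, so the following is a sketch under stated assumptions. Because veg.csv is not available, the code first writes a small stand-in file with hypothetical contents to a veg_data folder inside a temporary directory. With the real file in the working directory, the first argument would simply be "veg_data/veg.csv".

```r
# Write a small stand-in for veg_data/veg.csv (hypothetical contents)
veg_dir <- file.path(tempdir(), "veg_data")
dir.create(veg_dir, showWarnings = FALSE)
veg_path <- file.path(veg_dir, "veg.csv")
writeLines(c("species,site1,site2,site3",
             "sp_a,1,0,3",
             "sp_b,.,2,5"), veg_path)

# Comma separator, column names in row 1, row names in column 1, "." read as NA
veg <- read.table(veg_path, sep = ",", header = TRUE,
                  row.names = 1, na.strings = ".")
veg
```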

As before, note that as a legacy of its development under Unix, R locates files in directories using forward slashes (or doubled backslashes) rather than single Windows backslashes.

The read.csv() function assumes data are in a .csv format. Because the argument sep is unnecessary, this results in a simpler code statement.
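A sketch of the simpler read.csv() statement follows. It again uses a temporary stand-in file with hypothetical contents, since veg.csv itself is not available. Both sep = "," and header = TRUE are read.csv() defaults, so neither needs to be given.

```r
# Write a small stand-in .csv file (hypothetical contents)
csv_path <- tempfile(fileext = ".csv")
writeLines(c("species,site1,site2,site3",
             "sp_a,1,0,3",
             "sp_b,.,2,5"), csv_path)

# sep and header take their read.csv() defaults
veg <- read.csv(csv_path, row.names = 1, na.strings = ".")
str(veg)
```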

The function scan() can read in data from an essentially unlimited number of formats, and is extremely flexible with respect to character fields and storage modes of numeric data.

In addition to arguments used by read.table(), scan() has the arguments:

- what, which describes the storage mode of data, e.g., "logical", "integer", etc., or, if what is a list, components of variables including column names (see below), and
- dec, which describes the decimal point character (European scientists and journals often use commas).

Assume that the file has a column of species names, called species, that will serve as the dataframe’s row names, and 3 columns of numeric data, named site1, site2, and site3. We would read the data in with scan using:
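The original scan() call is not shown. The sketch below assumes a comma separated file with a header row and periods for missing values, and writes a stand-in file with hypothetical contents so that the code is self-contained. The final data.frame() step is one possible way to turn the resulting list into a dataframe with species as row names.

```r
# Write a small stand-in file (hypothetical contents)
scan_path <- tempfile(fileext = ".csv")
writeLines(c("species,site1,site2,site3",
             "sp_a,1,0,3",
             "sp_b,.,2,5"), scan_path)

# `what` is a list: "" marks a character field, 0 marks a numeric field.
# skip = 1 passes over the header row, because the names are given in `what`.
veg_list <- scan(scan_path,
                 what = list(species = "", site1 = 0, site2 = 0, site3 = 0),
                 sep = ",", skip = 1, na.strings = ".", quiet = TRUE)

# Assemble a dataframe, using the species names as row names
veg <- data.frame(veg_list[-1], row.names = veg_list$species)
veg
```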

The empty string species = "" in the list comprising the argument what, indicates that species contains character data. Stating that the remaining variables equal 0, or any other number, indicates that they contain numeric data.

The easiest way to import data, if the directory structure is unknown or complex, is to use read.csv() or read.table(), with the file.choose() function as the file argument. For instance, by typing:
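A minimal sketch of this approach is given below. The call to file.choose() opens a file browser, so the code is wrapped in interactive() to keep it from running in a non-interactive session. The wrapper is an addition for safety and is not required at the console.

```r
# file.choose() opens a dialog and returns the path of the selected file
if (interactive()) {
  df <- read.csv(file.choose())
  str(df)   # check that columns were read with the expected types
}
```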

we can now browse for a .csv file to open that will, following import, be a dataframe with the name df. Other arguments (e.g., header, row.names) will need to be used, when appropriate, to import the file correctly.

Occasionally strange characters, e.g., ï.., may appear in front of the first header name when reading in files created in Excel\(^{\circledR}\) or other Microsoft applications. This is due to the addition of Byte Order Mark (BOM) characters which indicate, among other things, the Unicode character encoding of the file. These characters can generally be eliminated by using the argument fileEncoding="UTF-8-BOM" in read.table(), read.csv(), or scan().
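The effect of the fileEncoding argument can be sketched with a small test file. The code below writes the three BOM bytes for UTF-8 by hand in front of a two-line .csv file, which is an artificial stand-in for a file saved by a spreadsheet program, and then reads it with fileEncoding = "UTF-8-BOM".

```r
# Write a .csv file that starts with the UTF-8 byte order mark (EF BB BF)
bom_path <- tempfile(fileext = ".csv")
bom <- as.raw(c(0xEF, 0xBB, 0xBF))
writeBin(c(bom, charToRaw("id,value\n1,2\n")), bom_path)

# The BOM is dropped on import, so the first column name is read cleanly
clean <- read.csv(bom_path, fileEncoding = "UTF-8-BOM")
names(clean)
```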

3.7.2 Import Using RStudio

RStudio allows direct menu-driven import of file types from a number of spreadsheet and statistical packages including Excel\(^{\circledR}\), SPSS\(^{\circledR}\), SAS\(^{\circledR}\), and Stata\(^{\circledR}\) by going to File\(>\)Import Dataset. We note, however, that restrictions may exist, which may not be present for read.table() and read.csv(). These are summarized in Table 3.6.

| | CSV or Text | Excel\(^{\circledR}\) | SAS\(^{\circledR}\), SPSS\(^{\circledR}\), Stata\(^{\circledR}\) |
|---|---|---|---|
| Import from file system or URL | X | X | X |
| Change column data types | X | X | |
| Skip or include columns | X | X | X |
| Rename dataset | X | X | |
| Skip the first n rows | X | X | |
| Use header row for column names | X | | |
| Trim spaces in names | X | | |
| Change column delimiter | X | | |
| Encoding selection | X | | |
| Select quote identifiers | X | | |
| Select escape identifiers | X | | |
| Select comment identifiers | X | | |
| Select NA identifiers | X | X | |
| Specify model file | | | X |

3.7.3 Final Considerations

It is generally recommended that datasets imported and used by R be smaller than 25% of the physical memory of the computer. For instance, they should use less than 8 GB on a computer with 32 GB of RAM. R can handle extremely large datasets, i.e., \(> 10\) GB, and \(> 1.2 \times 10^{10}\) rows. In this case, however, specific R packages can be used to aid in efficient data handling. Parallel computing and workstation modifications may allow even greater efficiency. The actual upper limit for a standard R dataframe is \(2^{31}-1\) (approximately \(2.1 \times 10^{9}\)) rows, roughly 2,000 times the 1,048,576 rows available in an Excel\(^{\circledR}\) 2019 worksheet (which can hold approximately \(1.7 \times 10^{10}\) cell elements). R also allows interfacing with a number of relational database storage platforms. These include open source entities that express queries in SQL (Structured Query Language). For more information see and .
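The 25% guideline can be checked roughly with object.size(), as in the sketch below. Base R has no portable function for querying physical memory, so ram_gb is an assumed value that should be replaced with the RAM of the computer in use.

```r
# In-memory size of a dataset, in bytes
data(Loblolly)
size_bytes <- as.numeric(object.size(Loblolly))

# Memory budget: 25% of physical RAM (ram_gb is an assumed value)
ram_gb <- 32
budget_bytes <- 0.25 * ram_gb * 1024^3

# TRUE if the dataset is within the guideline
size_bytes < budget_bytes

# Human-readable size
format(object.size(Loblolly), units = "auto")
```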

Exercises

- Create the following data structures:
  - (a) An atomic vector object with the numeric entries 1,2,3,4.
  - (b) A matrix object with two rows and two columns with the numeric entries 1,2,3,4.
  - (c) A dataframe object with two columns; one column containing the numeric entries 1,2,3,4, and one column containing the character entries "a","b","c","d".
  - (d) A list containing the objects created in (b) and (c).
  - (e) Using class(), identify the class and the data storage mode for the objects created in problems a-d. Discuss the characteristics of the identified classes.

- Assume that you have developed an R algorithm that saves hourly stream temperature sensor outputs greater than \(20^{\circ}\) from each day as separate dataframes and places them into a list container, because some days may have several points exceeding the threshold and some days may have none. Complete the following based on the list hi.temps given below:
  - Combine the dataframes in hi.temps into a single dataframe using do.call().
  - Create a dataframe consisting of 10 sets of repeated measures from the dataframe hi.temps$day2 using do.call().

- Given the dataframe boo below, provide solutions to the following questions:
  - Identify heights that are less than or equal to 80 inches.
  - Identify heights that are more than 80 inches.
  - Identify females (i.e. F) greater than or equal to 59 inches but less than 63 inches.
  - Subset rows of boo to contain only data for males (i.e. M) greater than or equal to 75 inches tall.
  - Find the mean weight of males who are 75 or 76 inches tall.
  - Use ifelse() or if() to classify heights less than 60 inches as "small", and heights greater than or equal to 60 inches as "tall".

- Create x <- NA, y <- NaN, and z <- NULL.
  - Test for the class of x using x == NA and is.na(x) and discuss the results.
  - Test for the class of y using y == NaN and is.nan(y) and discuss the results.
  - Test for the class of z using z == NULL and is.null(z) and discuss the results.
  - Discuss the NA, NaN, and NULL designations. What are these classes used for and what do they represent?

- For the following questions, use data from Table 3.7 below.
  - Write the data into an R dataframe called plant. Use the functions seq() and rep() to help.
  - Use names() to find the names of the variables.
  - Access the first row of data using square brackets.
  - Access the third column of data using square brackets.
  - Access rows three through five using square brackets.
  - Access all rows except rows three, five and seven using square brackets.
  - Access the fourth element from the third column using square brackets.
  - Apply na.omit() to the dataframe and discuss the consequences.
  - Create a copy of plant called plant2. Using square brackets, replace the 7th item in the 2nd column in plant2, an NA value, with the value 12.1.
  - Switch the locations of columns two and three in plant2 using square brackets.
  - Export the plant2 dataframe to your working directory.
  - Convert the plant2 dataframe into a matrix using the function as.matrix. Discuss the consequences.

| Plant height (dm) | Soil N (%) | Water index (1-10) | Management type |

|---|---|---|---|

| 22.3 | 12 | 1 | A |

| 21 | 12.5 | 2 | A |

| 24.7 | 14.3 | 3 | B |

| 25 | 14.2 | 4 | B |

| 26.3 | 15 | 5 | C |

| 22 | 14 | 6 | C |

| 31 | NA | 7 | D |

| 32 | 15 | 8 | D |

| 34 | 13.3 | 9 | E |

| 42 | 15.2 | 10 | E |

| 28.9 | 13.6 | 1 | A |

| 33.3 | 14.7 | 2 | A |

| 35.2 | 14.3 | 3 | B |

| 36.7 | 16.1 | 4 | B |

| 34.4 | 15.8 | 5 | C |

| 33.2 | 15.3 | 6 | C |

| 35 | 14 | 7 | D |

| 41 | 14.1 | 8 | D |

| 43 | 16.3 | 9 | E |

| 44 | 16.5 | 10 | E |

- Let:

\[\boldsymbol{A} = \begin{bmatrix}

2 & -3\\

1 & 0

\end{bmatrix}

\text{and } \boldsymbol{b} = \begin{bmatrix}

1\\

5

\end{bmatrix} \]

Perform the following operations using R:

  - \(\boldsymbol{A}\boldsymbol{b}\)

  - \(\boldsymbol{b}\boldsymbol{A}\)

  - \(\det(\boldsymbol{A})\)

  - \(\boldsymbol{A}^{-1}\)

  - \(\boldsymbol{A}'\)

- We can solve systems of linear equations using matrix algebra under the framework \(\boldsymbol{A}\boldsymbol{x} = \boldsymbol{b}\), and (thus) \(\boldsymbol{A}^{-1}\boldsymbol{b} = \boldsymbol{x}\). In this notation \(\boldsymbol{A}\) contains the coefficients from a series of linear equations (by row), \(\boldsymbol{b}\) is a vector of solutions given in the individual equations, and \(\boldsymbol{x}\) is a vector of solutions sought in the system of models. Thus, for the linear equations:

\[\begin{aligned} x + y &= 2\\ -x + 3y &= 4 \end{aligned}\]

\[\boldsymbol{A} = \begin{bmatrix} 1 & 1\\ -1 & 3 \end{bmatrix}, \boldsymbol{ x} = \begin{bmatrix} x\\ y \end{bmatrix}, \text{ and } \boldsymbol{b} = \begin{bmatrix} 2\\ 4 \end{bmatrix}.\]

\[\boldsymbol{A}^{-1}\boldsymbol{b} = \boldsymbol{x} = \begin{bmatrix} 1/2\\ 3/2 \end{bmatrix}.\]

\[ \begin{aligned} 3x + 2y - z &= 1\\ 2x - 2y + 4z &= -2\\ -x + 0.5y -z &= 0 \end{aligned} \]

- Complete the following exercises concerning the R contributed package asbio:

  - Install\(^{9}\) and load the package asbio for the current work session.
  - Access the help file for bplot() (a function in asbio).
  - Load the dataset fly.sex from asbio.
  - Obtain documentation for the dataset fly.sex and describe the dataset variables.
  - Access the column longevity in fly.sex using the function with().

- Create .csv and .txt datasets, place them in your working directory, and read them into R.

References

1. Note that distinctions of these objects are not always clear or consistent. For instance, a names attribute can be given to elements of vectors and lists, and columns of dataframes. However, only names from dataframes and lists can be made visible using attach, or called using $. See additional examples here.

2. Matrices and arrays which, optimally, will both be numeric storage structures, cannot have a names attribute. Instead, row names and column names can be applied using the functions rownames() and colnames(). These, however, cannot be made visible to search paths with attach() or called with $.

3. All objects from a specific class can also be removed from a workspace. For example, to remove all dataframes from a work session one could use: rm(list=ls(all=TRUE)[sapply(mget(ls(all=TRUE)), class) == "data.frame"])

4. The numeric class is often used as an alias for class double. In fact, as.numeric() is identical to as.double(), and numeric() is identical to double() (Wickham 2019).

5. All numeric objects in R are stored with double-precision, and will require two adjacent locations in computer memory (see Ch 12). Numeric objects coerced to be integers (with as.integer()) will be stored with double precision, although one of the storage locations will not be used. As a result, integers are not conventional double precision data.

6. Coercion can also be implemented using class generating functions described earlier. For instance, data.frame(matrix(nrow = 2, data = rnorm(4))) converts a \(2 \times 2\) matrix into an equivalent dataframe.

7. For instance, subset(), split(), and dplyr::filter().

8. This actually isn’t necessary since datasets in the package datasets default to being read into an R session automatically. This step will be necessary, however, in order to obtain datasets from packages that are not lazy loaded (Ch 10).

9. Installation of packages while knitting R Markdown or Sweave R code chunks is not allowed. Instead, one should install packages from the console. Required packages can (and should) be loaded while knitting once they are installed.